The Computational Web and the Old AI Switcharoo

For two grand I can buy a laptop with unfathomable levels of computational power. Stock, that laptop ships with silicon packed with transistors so impossibly tiny that they operate under different laws of physics. For just a few hundred dollars more, I get a hard drive that stores more documents than I could write if I wrote 24 hours a day for the rest of my life. It fits in my pocket. If I'm worried about losing those documents, or if I want them synced across all my devices, I pay Apple for iCloud. Begrudgingly.

If I were on a budget, any two-hundred-dollar laptop and a twenty-dollar USB drive could handle the computational load required for the drivel I pour daily into plain text files (shout / out / to / .txt).

Yet the average note-taking app charges ten bucks a month in perpetuity. It stores my writing in proprietary file formats that lock me into the app. In exchange, I get access to compute located 300 miles away, storage I don't need, and sync-and-share capabilities I already pay for. Now I can also expect a 20% price hike on all my subscriptions to pay for beta-quality AI solutions desperately searching for a problem to solve.

Welcome to the Computational Web.

I define the “Computational Web” by the increasingly gargantuan levels of computational power (compute) required to run the modern Internet, supplied by a small group of firms uniquely positioned to meet that demand.

The Computational Web is the commodification of computational power. The Computational Web marks the achievement of absolute control over the modern technology stack. The Computational Web signals a future where all of our personal computers devolve into mere cloud portals. These devices would be sleek, and thin, and inexpensive, and incapable of answering “how many ‘R’s are in the word strawberry?” without an internet connection (and maybe not even then).

The cloud used to be where we backed up our files and kept our devices synchronized.

But increasingly, we are seeing the cloud take over everything our computers are capable of doing. Tasks once handled by our MacBooks' CPUs and GPUs are being sent to an edge server to finish. No product on the market facilitates this process better than cloud-based AI solutions.

Aside: (I think) Sam Altman fundamentally misunderstood the role his product plays in the Computational Web. Now he's scrambling, begging companies with infrastructure for more compute.

Artificial intelligence, manifested in chatbots and agents, isn't the product. The product is the trillion-dollar data center kingdoms required to power those bots. ChatGPT might be OpenAI's Ford F-150, but data centers are Microsoft's gasoline. Without Microsoft's infrastructure, ChatGPT is a $500 billion paperweight. I don't know when Sam Altman realized AI is just a means to sell retail compute to the masses. Probably just before the ink dried on the two companies' partnership agreement.

Compute is expensive and difficult to scale. AI is the most compute-hungry consumer technology in the history of the web. So shoehorning AI features into our apps isn't just tech bros chasing their tails. It's setting the expectation that all consumer technology requires AI. If all technology requires AI, and only a handful of companies are equipped to handle the computational load that AI requires, then compute itself becomes a moat too deep for competitors to cross and for consumers to escape.

Compute is a scarce resource, and its scarcity is turning the tech industry into a cloud oligopoly: Google, Amazon, Microsoft, and Meta (GAMM). Our devices (laptops, desktops, phones) have grown dependent on the cloud, not just for storage, but to complete the types of tasks that our devices are largely capable of handling on their own.

Our dependence on cloud computing has, by and large, made local computing redundant. Something has got to go. Can you guess which it'll be?

An interesting side effect of late-stage capitalism is that business strategies become easy to forecast. Some in the blogosphere loathe this type of navel-gazing, but I find it fun. All you have to do to extrapolate big tech's strategies is realize that morality, ethics, and sometimes even the law are not considerations when one develops a “corner the market” business plan. It's Murphy's Law but for big tech. If it's possible and profitable, if it causes dependency and monopolies, then that's the plan. Competition is for losers, after all.

The ol’ switcharoo

Each iteration of the web starts with a promise to the people and ends with that promise broken and more money in the pockets of the folks who made it.

Through the early nineties, the “proto-web” promised us a non-commercial, interconnected system for cataloging the world’s academic knowledge. We spent millions in tax dollars building out the Internet’s infrastructure. The proto-web then ended with the Telecommunications Act of 1996: the wholesale redistribution of the people’s internet to the American Fortune 500.

Web 1.0, or the “Static Web,” promised a democratization of information and the ability to order your Pizza Hut on the Net. The Static Web ended when the dot-com bubble burst.

Web 2.0, or the “Social Web,” promised to connect the world, even if that meant people dying. So we gave them our contact info and placed their JavaScript snippets into our websites. The result of Web 2.0, an era that ended around 2020, is the platform era: techno-oligarchs and fascists who control all of our communication infrastructure and use black-box algorithms to keep us on-platform for as long as possible.

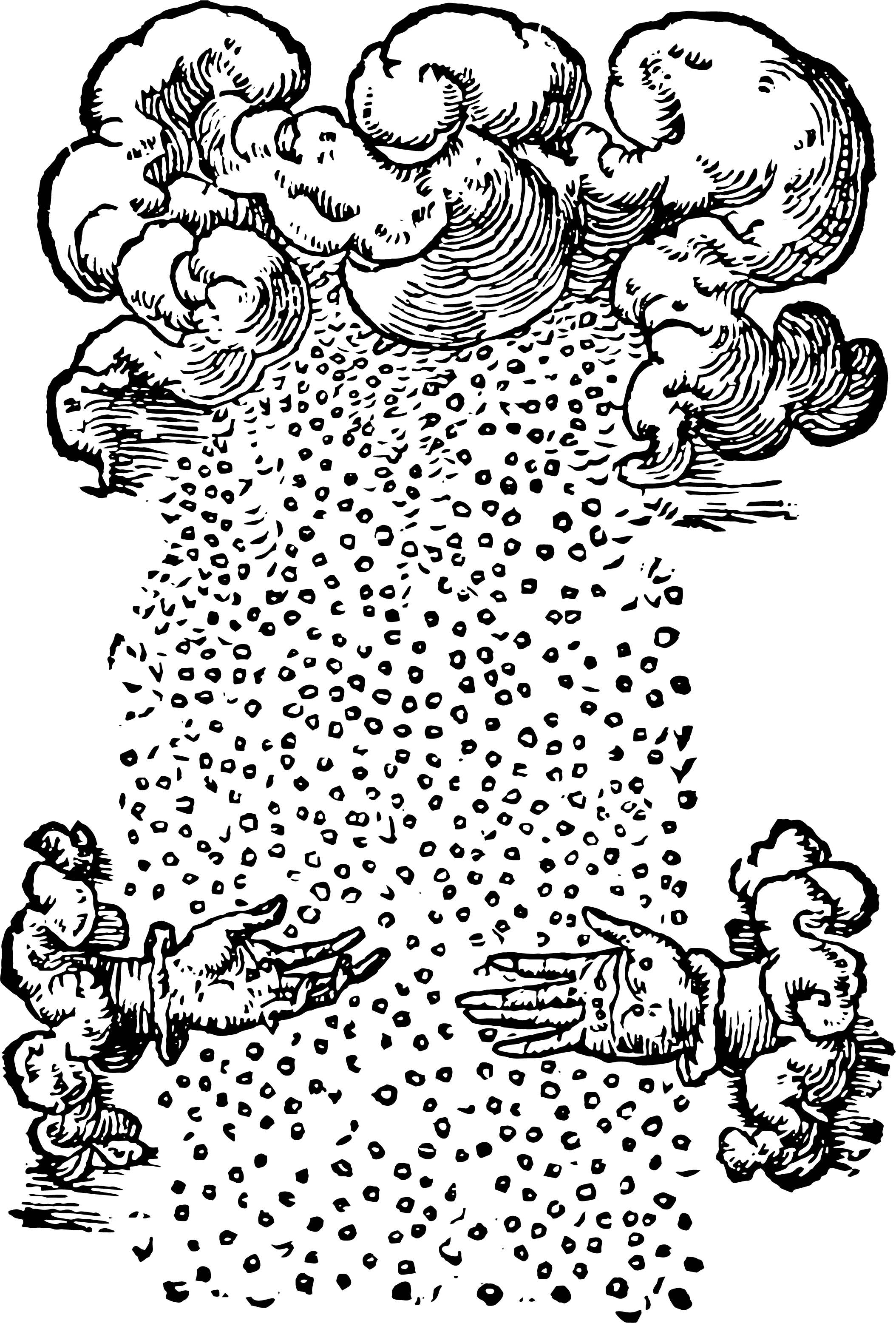

We are halfway into Web 3.0. The Computational Web has tossed a lot of hefty promises into that Trojan Horse we call AI: ending world hunger, poverty, and global warming, just to name a few. But this is for all the marbles. Promises of utopia are not enough. They must scare the shit out of us, too, by implying that AI in the wrong hands can bring about a literal apocalypse.

So, how will Web 3.0 end?

On New Year's, I made a couple of silly tech predictions for 2026 (because it's fun guessing what our tech overlords will do to become a literal Prometheus). The first prediction is innocuous enough. I think personal website URLs will become a status symbol in the social media bios of mainstream content creators. Linktrees are out. Funky blogs with GIFs and neon typography are in. God, how I hope this one happens. Not even for nostalgia. In the hyper-scaled, for-profit web, personal websites are an act of defiance. It's subversive. It's punk.

My second 2026 prediction, which, for the record, I hope does not happen, is an attack on local computing. We'll see a mainstream politician or tech elite call for outlawing local computing. This is big tech's end goal. First, position AI (LLM, agentic, or whatever buzzword of the time) as critical infrastructure needed to run our software. Then, leverage fear tactics into regulatory capture. The long game is a cloud-tethered world where local compute is a thing of the past: thin clients with a hefty egress invoice each month. GAMM will become the Comcast of computational power. (Not all of this is happening in 2026. I kind of went off the rails a bit.)

To get that ball rolling, all big tech needs is a picture of a brown man next to a group of daisy-chained Mac Minis and the headline “AI-Assisted Terrorist Attack.”